Grading takes time...

Teachers are overwhelmed. Between lessons, emails, and school duties, grading often consumes their evenings and weekends.

AI is changing that. A Gallup survey shows teachers who use AI save up to 6 hours a week — nearly 1.5 months per school year — helping prevent burnout. And while teachers are just beginning to explore AI in their work, students are already embracing it at a staggering pace. Reports suggest that more than 8 in 10 students globally use AI regularly for homework, essays, and studying.

The AI in education market is booming, growing from $5.9B in 2024 to a projected $32.3B by 2030. This isn’t a trend; it’s the future of assessment. Choosing the right grading tool matters. The right solution saves hours, improves feedback consistency, and supports learning. The wrong one creates extra work and security risks.

That’s why we’ve created this guide to help you understand not only why you need these tools, but what to look for. In this guide, you’ll find:

- Questions to ask before investing in an AI grading tool

- Why each one matters for your teachers, students, and district

- What to look for in an AI grading tool and what to avoid

- Tips to help you have a smooth rollout from day one

Question 1 to Ask: Does the tool natively integrate with our Learning Management System (LMS)?

If your teachers already spend most of their day inside Google Classroom, Canvas, or another LMS, the last thing they want is yet another disconnected tool added to their workload.

A truly effective grading tool should meet teachers where they already are: inside their LMS.

94% of school districts already use an LMS (EdWeek, 2022), so LMS integration is not optional for an AI tool; it is a requirement. Proper AI grading should work as an extension of your system, not complicate it. The problem arises when a vendor relies on middleware instead of native integrations: third-party connectors that often lead to:

- Delays in syncing assignments or rosters

- Inability to maintain proper version control on student submissions

- Repetitive authentication steps for teachers

- Data sprawl across multiple systems, increasing security and privacy risks

Question 2 to Ask: How accurate and consistent is the grading?

Accuracy is the backbone of any AI grading tool. If the AI can’t reliably match your teachers’ judgment, they will stop using it. The good news is that there is solid evidence behind the best automated scoring systems.

ETS research has shown that top-tier engines can align with trained human graders at rates around 95 to 97 percent.

That’s promising, but not every tool performs at that level.

Imagine a tool that grades the same essay differently on Monday than it does on Wednesday. Or one that grades a student’s writing more harshly simply because it doesn’t recognize dialect, voice, or nontraditional phrasing. These issues not only frustrate teachers but also erode trust in the system. And once trust is gone, adoption goes with it.
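During a pilot, a district can check both concerns with a small sample of essays that teachers have already scored. The sketch below is a minimal illustration, assuming teacher and AI scores sit on the same integer rubric scale. The function names and the sample scores are hypothetical; they are not part of any vendor’s API and are not the method used in the ETS research mentioned above.

```python
from typing import Sequence


def exact_agreement(human: Sequence[int], ai: Sequence[int]) -> float:
    """Share of essays where the AI score equals the teacher's score."""
    if len(human) != len(ai) or len(human) == 0:
        raise ValueError("score lists must be non-empty and the same length")
    matches = sum(1 for h, a in zip(human, ai) if h == a)
    return matches / len(human)


def adjacent_agreement(
    human: Sequence[int], ai: Sequence[int], tolerance: int = 1
) -> float:
    """Share of essays where the AI score is within `tolerance` points."""
    if len(human) != len(ai) or len(human) == 0:
        raise ValueError("score lists must be non-empty and the same length")
    matches = sum(1 for h, a in zip(human, ai) if abs(h - a) <= tolerance)
    return matches / len(human)


def run_to_run_consistency(
    first_run: Sequence[int], second_run: Sequence[int]
) -> float:
    """Share of essays that received the same AI score on two separate runs."""
    return exact_agreement(first_run, second_run)


# Hypothetical pilot data: eight essays scored on a 1-5 rubric.
teacher_scores = [4, 3, 5, 2, 4, 3, 5, 4]
ai_monday = [4, 3, 4, 2, 4, 3, 5, 3]
ai_wednesday = [4, 3, 4, 2, 5, 3, 5, 3]  # same essays, graded again later

print(f"Exact agreement:    {exact_agreement(teacher_scores, ai_monday):.0%}")
print(f"Adjacent agreement: {adjacent_agreement(teacher_scores, ai_monday):.0%}")
print(f"Run-to-run match:   {run_to_run_consistency(ai_monday, ai_wednesday):.0%}")
```

Exact and adjacent agreement are reported separately because a tool that is often one point off can still look strong on the looser measure. Comparing these rates across student groups is one way to look for the dialect and voice problems described above.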

Question 3 to Ask: Does the tool support your state’s curriculum standards?

Every school district or university has its own standards to follow, whether that’s Common Core, STAAR, B.E.S.T., Regents, AP, IB, or even internal frameworks that are very specific to your institution.

If an AI tool doesn’t line up with those frameworks right away, teachers end up spending extra hours tweaking rubrics, assignments, and assessments just to make it fit. By that point, the whole idea of saving time with automation is lost.

Even without issues with standards, many schools use rubrics that are too vague for AI to interpret. Criteria like “student demonstrates strong reasoning” lack clarity, forcing AI to guess — and guesses lead to inaccurate grading.

Question 4 to Ask: Does the tool include AI writing detection and plagiarism checking?

Why does this matter? Because teachers are finding it increasingly difficult to tell when student work is truly original. That creates an uneven playing field—students who write their own work feel penalized when others rely heavily on AI. And this isn’t just about one assignment. If schools can’t show they understand and manage AI use, it weakens parent trust, makes it harder to hold students accountable, and raises real questions about whether grades still reflect learning.

But here’s the nuance: not all AI use is cheating. Many students use AI for brainstorming, organizing thoughts, or refining grammar—not for generating full essays. If a grading tool treats every instance of AI assistance as plagiarism, it creates unnecessary tension and mistrust between teachers and students. The best tools recognize this balance.

They don’t just throw out scary AI percentages without explanation—they provide clear flags, context, and actionable insights. They show where AI was detected, reference original sources when possible, and empower teachers to have meaningful, informed conversations with students rather than punish them blindly.

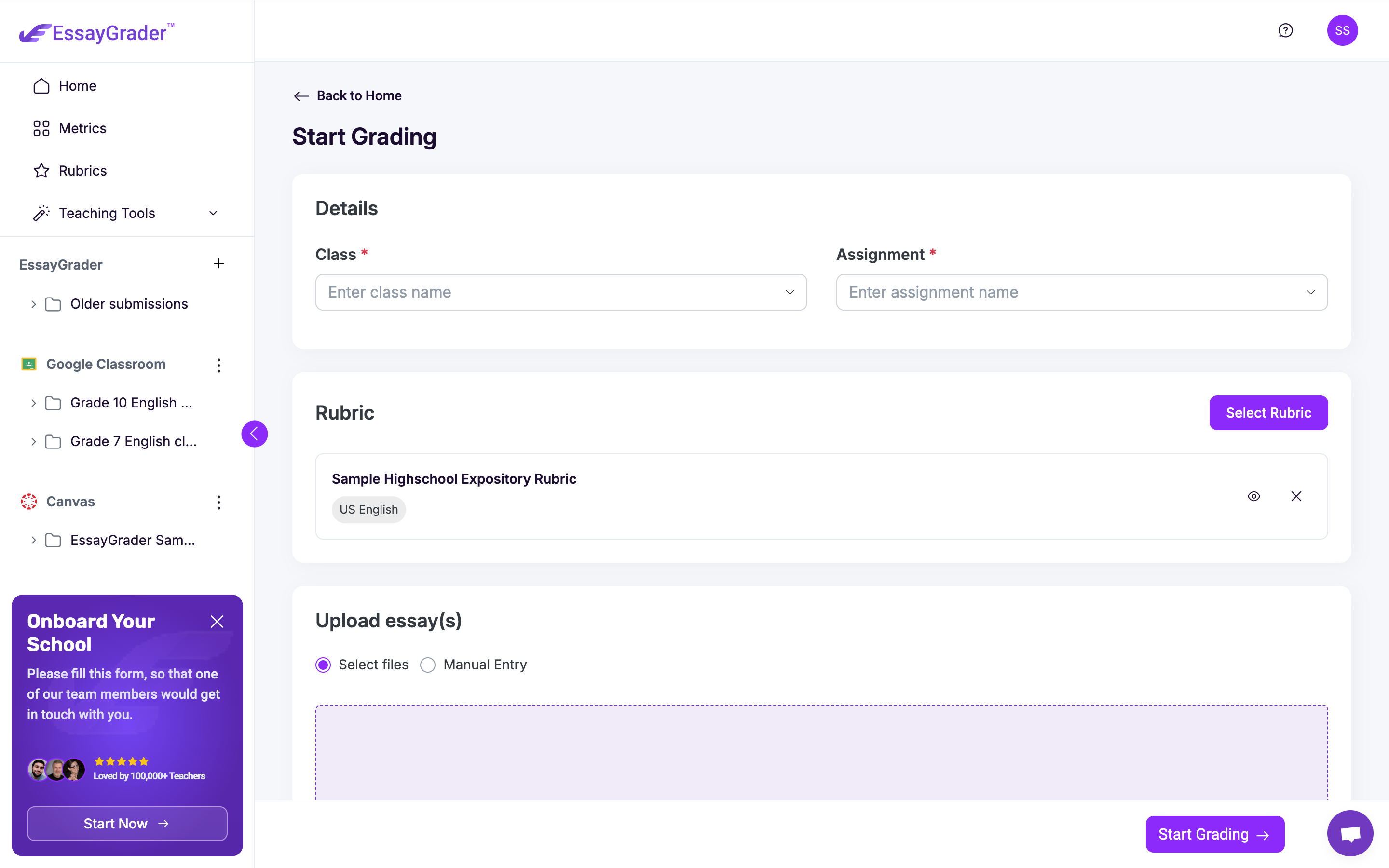

Question 5 to Ask: How easy is it to use the AI tool?

Over the past few years, schools have seen an explosion of digital tools. School districts went from using a few hundred EdTech tools in 2018 to more than 2,700 by the 2023/24 school year.

With this boom, teachers already juggle dozens of tools, apps, and logins. That’s why, if a tool feels complicated or slows teachers down, it’s less likely to stick. Interestingly, even with new AI models rolling out, more training opportunities, and a summer to reset, adoption dipped from 33% in December 2024 to 32% in October 2025.

It’s a small shift, but it shows that ease of use really matters. And ease of use isn’t just about a clean design. It’s about how closely the tool’s flows resemble your teachers’ real-life grading workflows.

A truly teacher-friendly AI grading tool should:

- Let teachers grade a full class set without jumping between multiple screens.

- Allow seamless edits or overrides when AI feedback misses the mark.

- Require minimal training to get started; ideally, teachers should be comfortable using the product after one demo.

- Integrate seamlessly with the LMS that is already in use.

Question 6 to Ask: Is the AI tool trained using your students’ work and assignments?

Some companies quietly use student writing to train their systems. At first glance, that might not sound like a big deal. But once that work is fed into someone else’s model, the school has no control over where it goes or how it might show up later.

That’s a problem for a few reasons.

Student privacy and consent: Depending on the assignment, some student essays can include personal details about their families, their lives, and even their health. That information was meant only for their teacher’s eyes, not for a vendor’s database.

Fairness and Ethics: It also raises a fairness issue: why should students’ work be used to make a vendor’s product better without their permission?

Trust and Transparency: And beyond all that, there’s trust. If parents find out their child’s writing is being stored or reused in ways they didn’t agree to, it puts the whole district in a bad light. Once that trust is broken, it’s tough to get it back.

Question 7 to Ask: Is the vendor truly secure and compliant - and will they sign a Data Privacy Agreement (DPA)?

Protecting student and staff data isn’t just about stopping hackers—it’s about ensuring vendors follow the same laws and security standards your school must follow. Cyberattacks on schools are rising, but the bigger risk is vendors who can’t prove their data practices are safe. Districts should go beyond marketing claims and require proof of compliance with privacy laws (FERPA, COPPA, PIPEDA, or state acts) and security certifications like SOC 2 or NIST.

These certifications are independent audits that verify a vendor’s systems are secure. A trustworthy vendor should also sign your Data Privacy Agreement (DPA), clearly defining how data is collected, used, stored, and deleted. If a vendor refuses, that’s a major red flag.

Question 8 to Ask: Can the vendor train your staff? What does onboarding look like?

The best tool in the world won’t matter if teachers don’t feel comfortable using it.

Training is what makes or breaks adoption. In fact, just last year, 48% of districts reported they had trained teachers on AI use — up from just 23% in 2024.

That’s progress, but it also means more than half of districts still haven’t given teachers any actual structured support to help navigate these tools.

Training also can’t be a one-time demo. Just like students, each teacher has a different way they like to learn.

Consider live sessions when they’re starting out, recordings they can watch later, and vendors with a support team they can count on and reach out to when questions come up during the year. It also helps when districts connect this training to professional development credits, so teachers see it as part of their growth and not just another thing on their plate.

About EssayGrader

The #1 AI grading tool used by 100,000+ teachers.

In the ever-demanding world of teaching, where teachers are stretched thin, EssayGrader stands as a beacon of relief, driven by a singular vision: lightening the grading burden for educators.

We know the challenges you face — like staring down a pile of 200 essays with just one of you to manage them all. It’s overwhelming, and we understand that better than anyone.

That’s why we built EssayGrader: It’s a straightforward, powerful tool that uses AI to take the load off your shoulders and completely change the way you approach grading.

Reach out to us at hello@essaygrader.ai or visit www.essaygrader.ai for more information.
