We recently engaged in a major project here at EssayGrader to expand our rubric library to include assessment tools from across the country. We noticed something surprising when dealing with writing rubrics from different states: many of them look almost identical.

Many teachers have a similar experience when they encounter a variety of state assessment tools. In particular, educators familiar with Smarter Balanced states recognize the same core Performance Task Writing Rubrics, complete with the same trait names, scoring descriptions, and grade-band structures, appearing in state documents from California to Vermont, from Hawaii to Delaware.

This consistency is not an accident.

It reflects the influence of assessment consortia—multi-state groups that develop shared academic standards, assessments, and scoring tools. While individual states customize aspects of their assessment systems, many still rely on consortium-built rubrics that ensure consistency, comparability, and instructional alignment across jurisdictions.

In this article, we explore:

- What assessment consortia are

- The four major consortia represented in EssayGrader’s rubric library

- Why their rubrics appear across so many states

- How states rebrand, update, or adapt consortium rubrics

- What this means for teachers—and how EssayGrader helps

Whether you're a teacher trying to understand your state’s scoring system or you’re curious why your colleague across the country uses the same rubric you do, this deep dive will clarify how we got here.

1. What Is an Assessment Consortium?

An assessment consortium is a partnership among multiple states that collaborate to design standardized tests, scoring guides, and performance expectations.

By pooling resources and expertise, consortia can:

- Create high-quality assessments aligned to shared standards

- Support cross-state comparability

- Reduce costs compared to each state developing tests independently

- Provide consistent scoring tools (including rubrics) across member states

Most large consortia emerged during the rollout of the Common Core State Standards (CCSS), when states sought aligned, rigorous assessments that measured writing, reading, and mathematical reasoning more effectively than older state tests.

Not all consortia lasted in their original form—but their rubrics endure.

Why Consortia Formed During the Common Core Era

In the years leading up to the Common Core, more than half of U.S. states faced rising concerns from colleges and employers that high school graduates were not prepared for post-secondary work. States were also spending large sums independently developing tests that often varied wildly in quality and rigor.

When the Common Core was adopted, it created—for the first time—a shared set of expectations for student learning across states. This opened the door for consortia: groups of states that could pool resources to build higher-quality assessments than any one state could produce alone. The result was a wave of collaboration aimed at improving comparability, increasing rigor, and modernizing the role of assessment in instruction.

2. The Major Consortia That Shape Today’s Writing Rubrics

While no single consortium defines writing assessment nationwide, a relatively small group has had an outsized influence on how writing is evaluated in K–12 classrooms today.

Some of these influences are indirect. For example, the WIDA Consortium shapes writing expectations for multilingual learners across most U.S. states, while alternate-assessment consortia such as the Dynamic Learning Maps Consortium support students with significant cognitive disabilities using simplified but structured writing frameworks. Vendor-developed systems like ACT have also contributed language and scoring conventions that appear in some state rubrics.

However, when it comes to the core writing rubrics used in general K–12 accountability assessments, a smaller set of consortia has been especially influential.

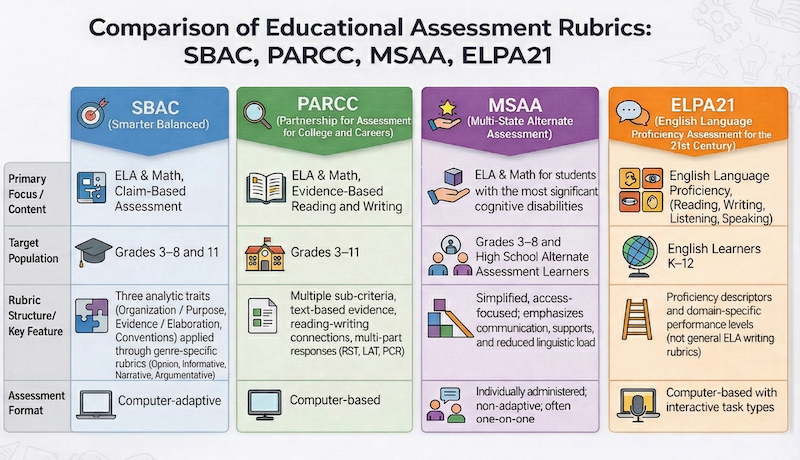

EssayGrader’s rubric library includes materials from four of the most influential assessment consortia:

- Smarter Balanced Assessment Consortium (SBAC)

- PARCC (Partnership for Assessment of Readiness for College and Careers)

- Multi-State Alternate Assessment (MSAA)

- ELPA21 (English Language Proficiency Assessment for the 21st Century)

Each of these consortia plays a different role in shaping the writing expectations teachers work with today. Some focus on general education writing aligned to college- and career-readiness standards, while others support alternate assessments or English language proficiency. Together, they help explain why writing rubrics across states often share common structures, language, and scoring criteria—even when assessments are administered independently.

Let’s take a closer look at these four.

3. Smarter Balanced Assessment Consortium (SBAC)

The most widespread and influential writing rubrics in the U.S.

SBAC is by far the most influential consortium when it comes to writing rubric alignment. Its Performance Task Writing Rubrics—covering Opinion, Informative/Explanatory, Narrative (two grade bands), and Argumentative writing—have become standard tools across 15+ states.

When the Common Core State Standards (CCSS) were introduced, SBAC became one of two major national consortia charged with designing assessments that would move beyond multiple-choice testing. SBAC’s development was rooted in several guiding principles:

- Assessing deeper learning. SBAC believed students needed to demonstrate reasoning, analysis, and evidence use—not just recall. This is why SBAC rubrics emphasize elaboration, purpose, and command of evidence.

- Performance tasks as authentic measures. The consortium intentionally designed extended writing tasks that mimic real-world literacy challenges, allowing students to engage in research, synthesis, and sustained argumentation.

- Claim-based structure. Instead of testing isolated standards, SBAC organized assessments around broad “claims” about what students should know and be able to do. The writing rubrics reflect these claims by evaluating complex, integrated skills.

- Equity through accessibility. Universal Design for Learning (UDL), accommodations, and adaptive testing were embedded from the start to increase fairness across student populations.

- Consistency across states. A core purpose of SBAC was to provide comparable expectations nationwide, ensuring a Washington 7th grader was held to the same writing standards as a Nevada or Vermont 7th grader.

The resulting rubric structure—three holistic traits paired with genre-specific rubrics—was designed to support teachers in understanding not just what students wrote but how well they communicated, reasoned, and organized their ideas.

The Vision Behind SBAC: Deeper Learning Through Authentic Assessment

When SBAC was created, its designers set out to build an assessment system that moved beyond bubble sheets and toward deeper demonstrations of learning.

The consortium emerged during the rollout of the Common Core State Standards, but its philosophy was shaped more by cognitive science and instructional design than by politics. SBAC assessments were built around broad learning “claims” intended to capture real-world literacy skills such as reasoning, organization, and evidence use.

Performance tasks were designed to feel authentic rather than formulaic, and the rubrics followed those same principles: three holistic traits that measure a student’s ability to communicate purposefully and coherently.

SBAC also placed accessibility at the center of its work, embedding universal design principles and adaptive testing to ensure that students across the achievement spectrum could demonstrate what they know. This combination of depth, clarity, and equity-minded design is part of why SBAC’s writing rubrics remain influential even in states that no longer participate in the consortium.

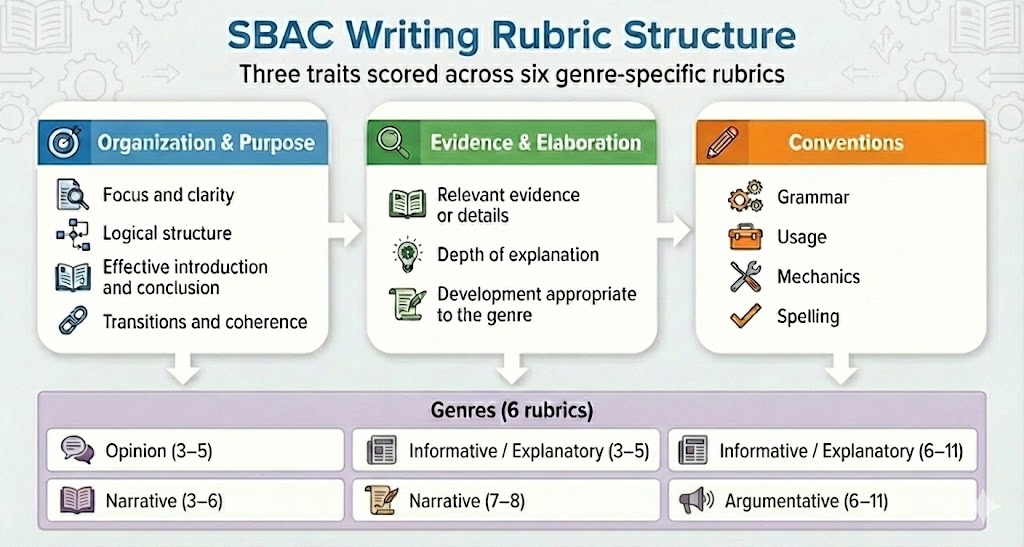

SBAC’s Writing Rubric Structure

All SBAC states use the same six rubrics:

- Opinion (Grades 3–5)

- Informative/Explanatory (Grades 3–5)

- Narrative (Grades 3–6)

- Narrative (Grades 7–8)

- Argumentative (Grade 6)

- Argumentative (Grades 7–11)

Each rubric evaluates the same three traits:

- Organization and Purpose

- Evidence and Elaboration

- Conventions

And each trait uses the same point scale across all states.
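For readers who think in data structures, the rubric set above can be sketched as a small lookup table. This is a minimal illustration only: the `Rubric` class, the `SBAC_RUBRICS` tuple, and the `find_rubric` helper are hypothetical names invented for this example, not part of any Smarter Balanced or EssayGrader tool. The grade bands are copied from the list above, and the point scale is left out because this article does not state it.

```python
from dataclasses import dataclass

# The three traits named above, shared by every rubric in the set.
TRAITS = ("Organization and Purpose", "Evidence and Elaboration", "Conventions")


@dataclass(frozen=True)
class Rubric:
    """Hypothetical record for one genre-and-grade-band rubric."""
    genre: str
    min_grade: int
    max_grade: int
    traits: tuple = TRAITS

    def covers(self, grade: int) -> bool:
        # True when the grade falls inside this rubric's grade band.
        return self.min_grade <= grade <= self.max_grade


# The six rubrics exactly as listed in this article.
SBAC_RUBRICS = (
    Rubric("Opinion", 3, 5),
    Rubric("Informative/Explanatory", 3, 5),
    Rubric("Narrative", 3, 6),
    Rubric("Narrative", 7, 8),
    Rubric("Argumentative", 6, 6),
    Rubric("Argumentative", 7, 11),
)


def find_rubric(genre: str, grade: int):
    """Return the rubric for a genre and grade, or None if none applies."""
    for rubric in SBAC_RUBRICS:
        if rubric.genre == genre and rubric.covers(grade):
            return rubric
    return None
```

Under these assumptions, `find_rubric("Narrative", 7)` selects the Grades 7–8 narrative rubric, while a genre and grade pairing that is not in the list, such as Opinion in grade 9, returns `None`.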

Why SBAC Chose Holistic Traits Instead of Analytic Subscores

Unlike PARCC and many state-developed rubrics, SBAC intentionally chose a holistic scoring model within each trait. Rather than breaking writing into dozens of micro-skills, SBAC wanted scorers to make professional judgments about how well a student’s writing achieved its purpose.

This approach reduces penalization for isolated errors and rewards overall coherence, clarity of reasoning, and effectiveness of communication. Many teachers appreciate this balance, as it supports formative instruction and mirrors authentic writing evaluation more closely than point-by-point analytic systems.

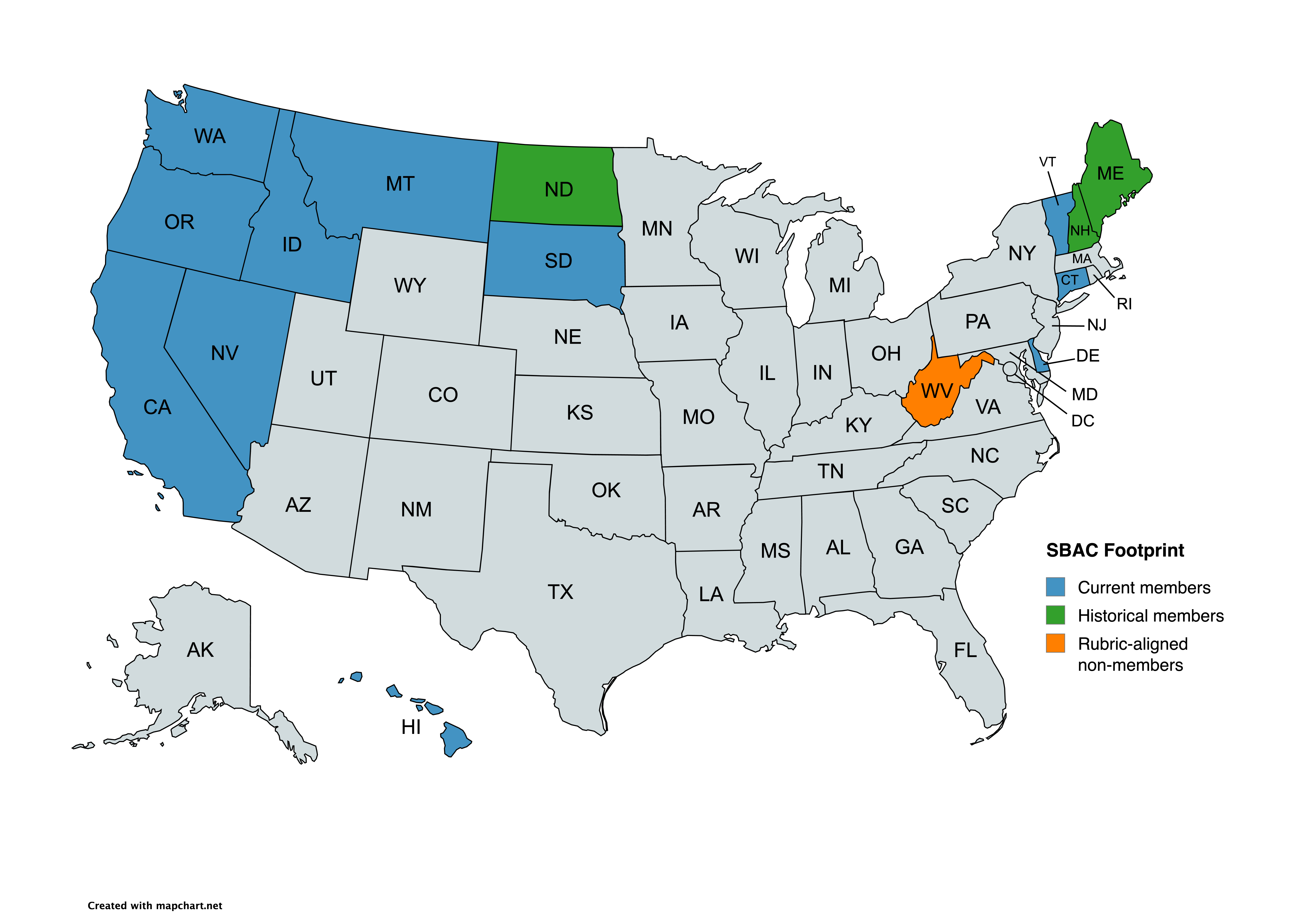

States That Use SBAC Rubrics

Many states use the Smarter Balanced test directly; others rebrand it but keep the rubrics unchanged. These include:

- California (CA)

- Connecticut (CT)

- Delaware (DE)

- Hawaii (HI)

- Idaho (ID)

- Montana (MT)

- Nevada (NV)

- South Dakota (SD)

- Vermont (VT)

- Washington (WA)

- West Virginia (WV)

These rubrics often appear in different contexts—sometimes with state branding or header differences—but the scoring text is identical.

Why States Continue Using SBAC Rubrics

Even when states customize their tests or alter branding, they often keep the SBAC rubrics because:

- They align to Common Core

- Teachers are familiar with them

- They provide reliable, validated scoring frameworks

- They are comprehensive yet adaptable

This explains why a California teacher might instantly recognize a Washington rubric—and why EssayGrader maintains a master SBAC rubric set referenced across states.

4. PARCC (Partnership for Assessment of Readiness for College and Careers)

A highly influential consortium that shaped modern writing assessments.

PARCC emerged from the same national moment as SBAC but embraced a different vision of literacy development and assessment. Its guiding principles were shaped by:

- College and career readiness expectations. PARCC placed heavy emphasis on the skills required in post-secondary settings: analyzing texts, synthesizing information, and writing evidence-based arguments.

- Reading–writing integration. The consortium strongly believed writing should grow out of meaningful engagement with text. As a result, almost all PARCC writing tasks involve responding to complex passages—something reflected in its rubrics, especially the Evidence and Reading Comprehension components.

- Transparency and instructional alignment. PARCC published detailed item prototypes, practice tests, and scoring guides to help educators understand expectations. Rubrics were intentionally analytic so teachers could pinpoint specific strengths and gaps.

- Equity through rigor. PARCC aimed to reduce variability in expectations by defining a clear, high bar for student writing that would apply across participating states.

PARCC’s rubrics remain some of the most detailed in the country, intentionally breaking writing into explicit dimensions so teachers can better diagnose student performance.

The PARCC Approach: Rigor, Evidence, and College Readiness

PARCC began with an ambitious national vision: to create assessments that truly reflected the demands of college and career readiness. At the heart of PARCC’s approach was the belief that reading and writing should be inseparable—students should engage with complex texts, analyze ideas, and craft arguments grounded in evidence.

The result was a set of multi-part tasks (such as the Research Simulation and Literary Analysis Tasks) that asked students to think deeply rather than simply recall information. PARCC rubrics mirrored this philosophy with detailed analytic criteria that helped teachers pinpoint strengths and areas for growth.

Yet this rigor came with political challenges. States saw proficiency rates drop sharply under PARCC, and the consortium became entangled in broader debates about the Common Core.

While only a small number of states continue to administer PARCC or its successors, its influence persists through rubrics and writing expectations adopted—sometimes quietly—by states long after they moved away from the name.

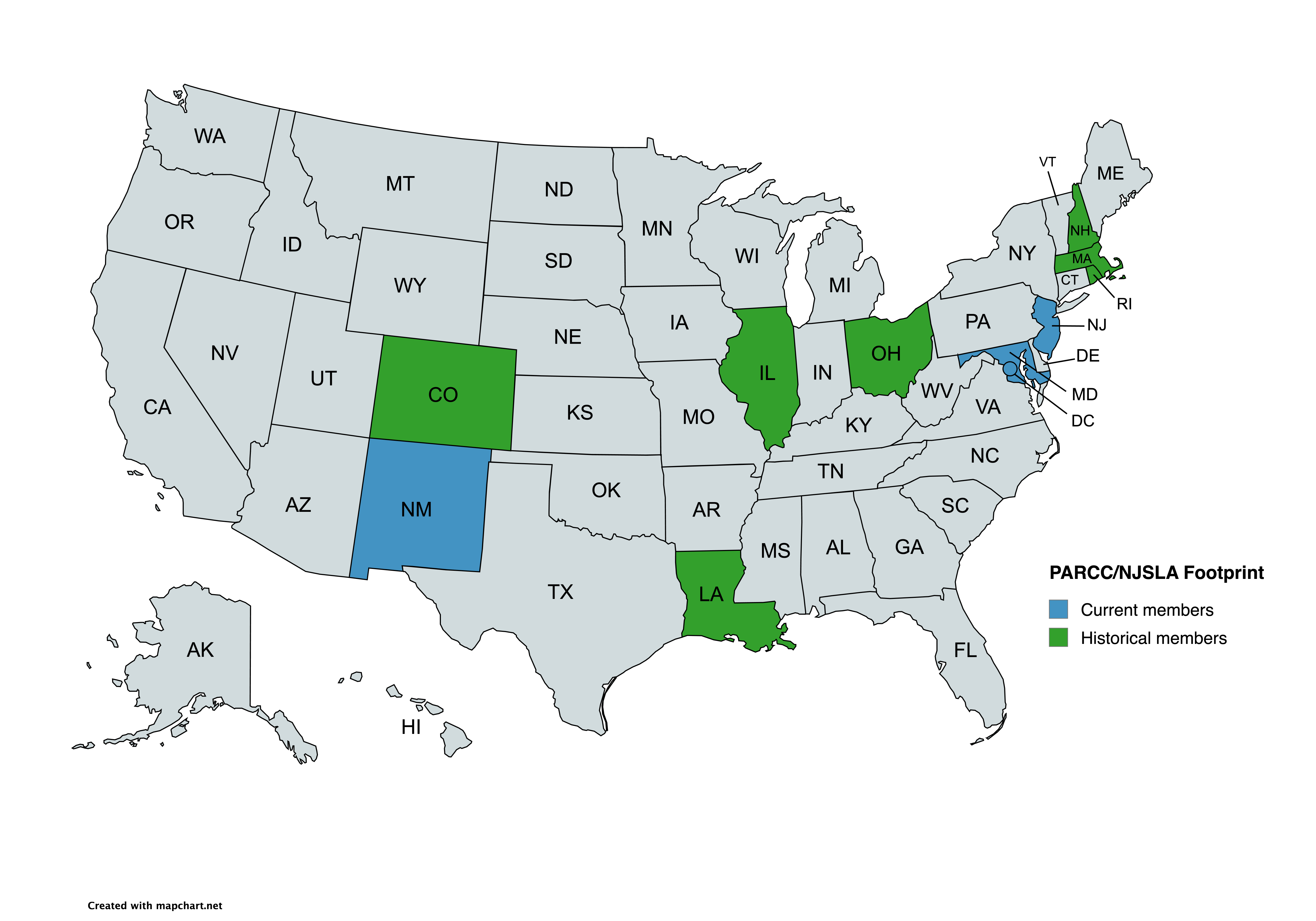

States Using PARCC-Derived Rubrics

Although PARCC as a consortium has changed, its rubric frameworks survive through:

- District of Columbia (DC CAPE / PARCC)

- New Jersey (NJSLA, which replaced PARCC but keeps the same structure)

Other states, including Maryland and New Mexico, carried PARCC DNA in their rubrics at various points in the past, but those states now maintain their own scoring guides.

Why PARCC Shrank but Its Influence Didn’t

PARCC’s original vision involved nationwide comparability and transparent rigor, but political pressures made it difficult for many states to sustain participation. Some governors argued the tests were too long or too difficult, while others objected to the association with federal initiatives.

But even when states withdrew, many quietly kept PARCC’s scoring frameworks because teachers found them instructionally useful. Today, PARCC’s DNA survives in several state systems—even when the acronym does not—thanks to its strong alignment with evidence-based literacy practices.

What Makes PARCC Rubrics Distinct

PARCC rubrics introduced:

- Prose Constructed Response (PCR) scoring

- Evidence-based writing expectations

- Task types like RST (Research Simulation Task) and LAT (Literary Analysis Task)

- A similar but not identical trait structure compared to SBAC

While not as widespread as SBAC rubrics, PARCC frameworks remain important for states that maintained or evolved the model.

5. MSAA (Multi-State Alternate Assessment)

Shared writing rubrics for alternate assessments.

Unlike SBAC or PARCC, MSAA focuses exclusively on alternate assessments. It was developed specifically for students with the most significant cognitive disabilities and rests on a profoundly different philosophical foundation from general education consortia. Its development was shaped by:

- Presuming competence. MSAA starts from the belief that all students, regardless of disability severity, can engage in academic learning and express understanding when supported appropriately.

- Reduced linguistic and cognitive load. The standards and assessments were intentionally designed to avoid overwhelming students with complex formatting, vocabulary, or multi-step demands.

- Universal Design for Learning. UDL principles guided every stage of the assessment—presentation, response options, accessibility supports, and scoring.

- Focus on meaningful communication. The writing expectations emphasize clarity, intent, and supported expression, acknowledging the broad range of communication modes used by MSAA populations.

- Individualized administration. MSAA assumes interactive and one-on-one testing, which influenced the simplified rubric criteria and the emphasis on observable behaviors.

MSAA’s writing rubrics are streamlined but purposeful: they evaluate how effectively a student can convey meaning with appropriate support, reflecting a philosophy grounded in dignity, access, and high expectations.

From NCSC to MSAA: A Continuum of Reform

MSAA didn’t appear in isolation; it emerged from earlier work by the National Center and State Collaborative (NCSC), a consortium committed to improving alternate assessments by grounding them in academic content rather than functional or life-skills–only approaches.

When NCSC concluded its federal grant cycle, MSAA continued this work under a new organizational structure, preserving the same commitment to equitable access and high expectations for students with significant cognitive disabilities. This continuity explains why MSAA rubrics feel pedagogically thoughtful and consistent across member states.

MSAA’s Foundation: Access, Dignity, and the Presumption of Competence

MSAA occupies a very different educational space, but it is grounded in principles just as ambitious as those of SBAC and PARCC. Developed for students with the most significant cognitive disabilities, MSAA grew out of a movement that emphasized the presumption of competence, equitable access, and the need for meaningful academic participation for all learners.

Its standards and assessments are designed to reduce linguistic and cognitive load while still honoring each student’s ability to communicate and demonstrate understanding. Universal Design for Learning guided its development, ensuring flexibility in how content is presented and how students respond—whether through speech, gesture, AAC devices, or other modalities.

MSAA rubrics therefore focus on clarity, intent, and supported expression rather than the traditional genre-based structures used in general education. As a result, MSAA represents one of the most thoughtful applications of accessibility and dignity in the assessment landscape.

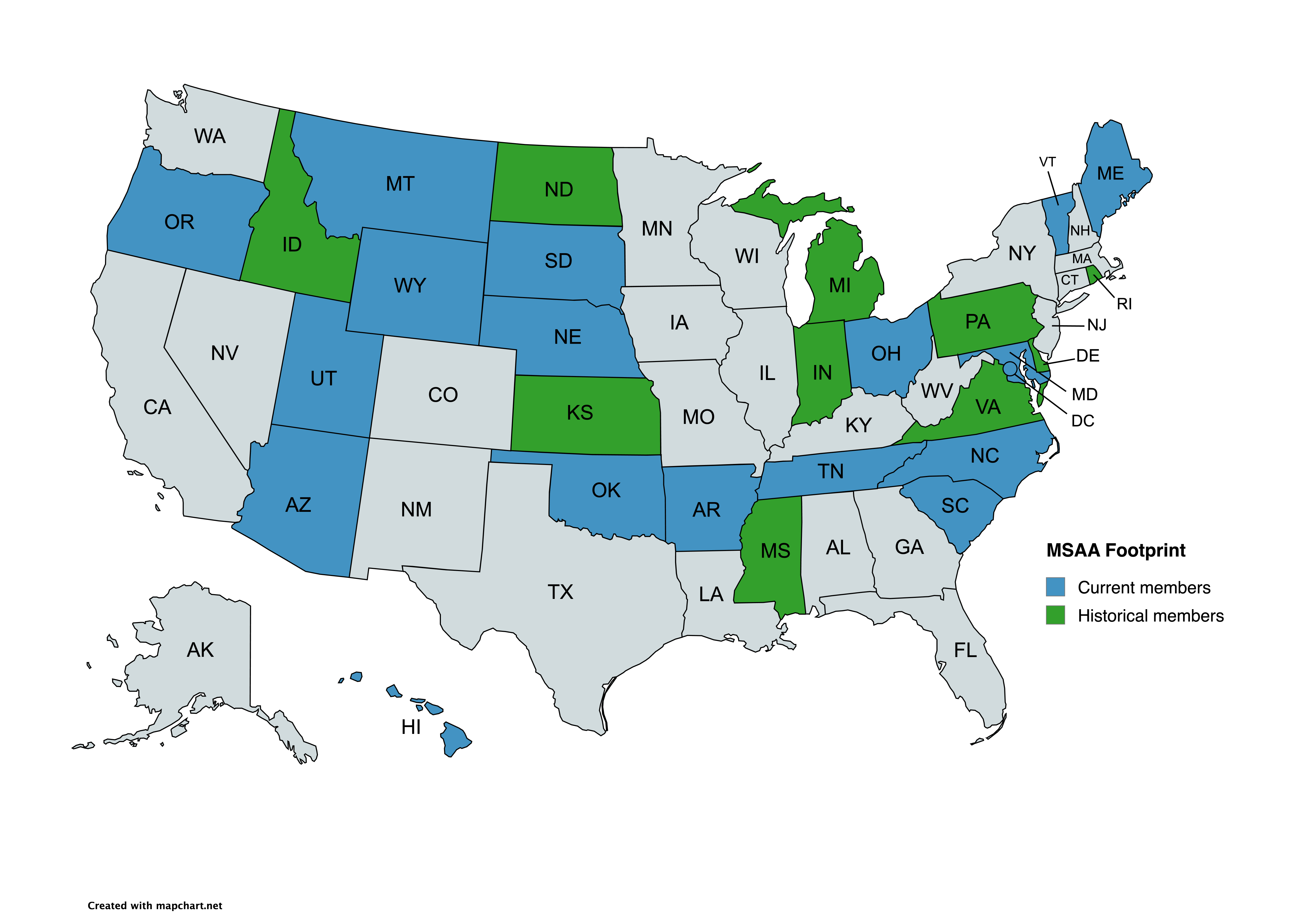

States Using MSAA Rubrics

These states currently administer the MSAA for alternate ELA and math assessment.

- Arizona (AZ)

- Arkansas (AR)

- District of Columbia (DC)

- Hawaii (HI)

- Maine (ME)

- Maryland (MD)

- Montana (MT)

- Nebraska (NE)

- North Carolina (NC)

- Ohio (OH)

- Oklahoma (OK)

- Oregon (OR)

- South Carolina (SC)

- South Dakota (SD)

- Tennessee (TN)

- Utah (UT)

- Vermont (VT)

- Wyoming (WY)

- U.S. Virgin Islands (USVI)

Other states are historically aligned but are not MSAA members today, including a number that were part of NCSC but never joined MSAA. When MSAA officially launched, those states transitioned to alternate assessments developed locally or aligned with WIDA ACCESS for ELLs (Alternate ACCESS) frameworks.

MSAA Writing Rubrics

MSAA rubrics differ dramatically from SBAC or PARCC:

- They are not genre-specific (e.g., no separate Argumentative or Narrative rubrics).

- They use Level 2 and Level 3 performance criteria.

- They emphasize foundational writing behaviors and communication.

These rubrics are identical across all MSAA states.

6. ELPA21 (English Language Proficiency Assessment for the 21st Century)

A consortium focused on English learner (EL) language development.

ELPA21 is a consortium providing English Language Proficiency assessments, not general academic ELA assessments. The writing demands are therefore different. It was created in response to a need for modern, research-based English language proficiency standards aligned with CCSS but distinct from content-area assessments. Its design was shaped by:

- Language as a tool for learning. ELPA21 focuses on the language functions and forms students need to access academic content, rather than general ELA writing expectations.

- WIDA/CCSS research foundations. The consortium drew from seminal research on second-language acquisition, sociocultural learning theory, and disciplinary language.

- Clear proficiency progressions. The goal was to define what language growth looks like across levels, ensuring that teachers could see measurable movement from emerging to proficient.

- Four-domain integration. Reading, writing, listening, and speaking are treated not as isolated skills but as interdependent means of meaning-making. Tasks and rubrics reflect this convergence.

- Authentic communicative tasks. ELPA21 assessments were built to measure real academic communication—argumentation, explanation, clarification—not rote grammar or isolated vocabulary.

ELPA21’s scoring guides highlight communicative effectiveness, clarity, and command of language features, providing a distinct lens separate from general education writing rubrics.

Why ELPA21 Formed When It Did

In the early 2010s, states recognized that English learners were being assessed with tools that did not reflect the linguistic demands of modern classrooms. Many earlier English proficiency tests focused heavily on grammar accuracy or vocabulary recall—skills that did not meaningfully predict academic success.

At the same time, research was demonstrating that language learning is deeply social and disciplinary: students must use language to argue, explain, and participate in inquiry. ELPA21 arose from states that wanted an assessment aligned to this newer understanding.

While WIDA remained the dominant consortium nationally, ELPA21 offered an alternative built on collaboration among states with shared instructional priorities, especially in the Pacific Northwest and Mountain West.

ELPA21’s Design: Measuring Language Growth Across Academic Life

ELPA21 emerged from a recognition that English language proficiency assessments needed to reflect modern research on language development and the linguistic demands of contemporary classrooms.

Unlike general ELA assessments, ELPA21 was built around the idea that language is a tool for learning across all subjects—not an isolated skill. Its standards draw from second-language acquisition research and sociocultural theories of communication, defining clear progressions in how English learners develop proficiency in reading, writing, listening, and speaking.

The assessment tasks mirror real academic communication, such as explaining ideas, supporting claims, or negotiating meaning. Because of this, ELPA21 does not use genre-specific writing rubrics like SBAC or PARCC; instead, it relies on domain-based performance descriptors that reflect the broader goals of language growth.

The result is a framework that helps educators understand not just whether students can write in English, but how they use language to participate meaningfully in academic life.

States Using ELPA21

ELPA21 states include:

- Arkansas (AR)

- Iowa (IA)

- Louisiana (LA)

- Nebraska (NE)

- Oregon (OR)

- Washington (WA)

- West Virginia (WV)

What Makes ELPA21 Rubrics Different

ELPA21 writing rubrics focus on:

- Language production

- Clarity and organization

- Linguistic complexity

- Vocabulary and grammar appropriate to context

They assess writing as a language skill, not as a full ELA composition task.

7. A Landscape of Shared Origins — and Diverging Paths

Although consortium rubrics provided a shared foundation, states have taken varied paths in how they present, adapt, or replace those tools. Political climate, legislative mandates, vendor contracts, and local instructional philosophies have all shaped how assessments evolve over time.

This is why a state may continue using SBAC rubrics long after leaving the consortium—or why another may redesign its entire system while preserving the core rubric language teachers rely on. While consortia offer standardization, many states rebrand their assessments to emphasize local ownership.

For example:

- California uses SBAC but calls the overall system CAASPP.

- Washington calls its assessment SBA, even though it’s Smarter Balanced.

- New Hampshire uses SAS, but its writing rubrics still match SBAC.

- Maine replaced SBAC with eMPowerME, but legacy SBAC rubrics remain relevant.

- Oregon shifted to OSAS, developing new writing rubrics distinct from SBAC.

State leaders often rebrand assessments to align with state legislation, political priorities, or revised content standards—but rewriting rubrics from scratch is costly, so many elements remain unchanged.
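The examples above amount to a mapping from a state's branded assessment name to the rubric family underneath it. The sketch below shows one way to represent that mapping. The `STATE_ASSESSMENTS` table and both helper functions are hypothetical, and the entries only restate the five examples in this section rather than forming an authoritative registry.

```python
# Hypothetical lookup table built only from the five examples in this section.
# Each entry maps a state to (assessment brand, rubric family).
STATE_ASSESSMENTS = {
    "CA": ("CAASPP", "SBAC"),
    "WA": ("SBA", "SBAC"),
    "NH": ("SAS", "SBAC"),
    "ME": ("eMPowerME", "SBAC (legacy)"),
    "OR": ("OSAS", "state-developed"),
}


def rubric_family(state: str) -> str:
    """Describe which rubric family sits behind a state's branded test."""
    brand, family = STATE_ASSESSMENTS[state]
    return f"{brand} -> {family} rubrics"


def states_sharing(family: str) -> list:
    """List the states in the table whose rubrics belong to one family."""
    return sorted(
        state
        for state, (_, fam) in STATE_ASSESSMENTS.items()
        if fam == family
    )
```

Keeping the brand and the rubric family as separate fields mirrors the point of this section: the name on the test can change while the scoring language underneath stays the same.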

8. Why Consortium Rubrics Matter for Teachers

For teachers, these shared rubrics:

- Provide consistency even if they move across states

- Clarify expectations aligned to Common Core

- Support professional development across districts

- Make it easier to interpret student performance on writing tasks

But they also create confusion when:

- States rebrand tests but keep the same rubrics

- Legacy versions exist alongside newer state-specific ones

- PDF formatting differs even when rubric text is identical

This confusion is precisely why EssayGrader maintains accurate versions of consortium rubrics and clearly labels state variations.
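One simple way to check whether two differently formatted documents carry the same rubric text is to normalize the text and compare fingerprints. The following is a minimal sketch of that idea under stated assumptions, not a description of how EssayGrader works internally. The function names are invented for this example, and it assumes the rubric text has already been extracted from the PDF as a plain string.

```python
import hashlib
import re


def fingerprint(rubric_text: str) -> str:
    """Hash rubric text after removing differences in spacing and case."""
    # Collapse runs of whitespace (line breaks, tabs, double spaces) to one space.
    normalized = re.sub(r"\s+", " ", rubric_text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def same_rubric_text(a: str, b: str) -> bool:
    """True when two rubric texts match once layout differences are ignored."""
    return fingerprint(a) == fingerprint(b)
```

This ignores only whitespace and capitalization. State branding, headers, or reworded descriptors would still produce different fingerprints, so a mismatch signals that the documents deserve a closer side-by-side read rather than proving the rubrics differ in substance.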

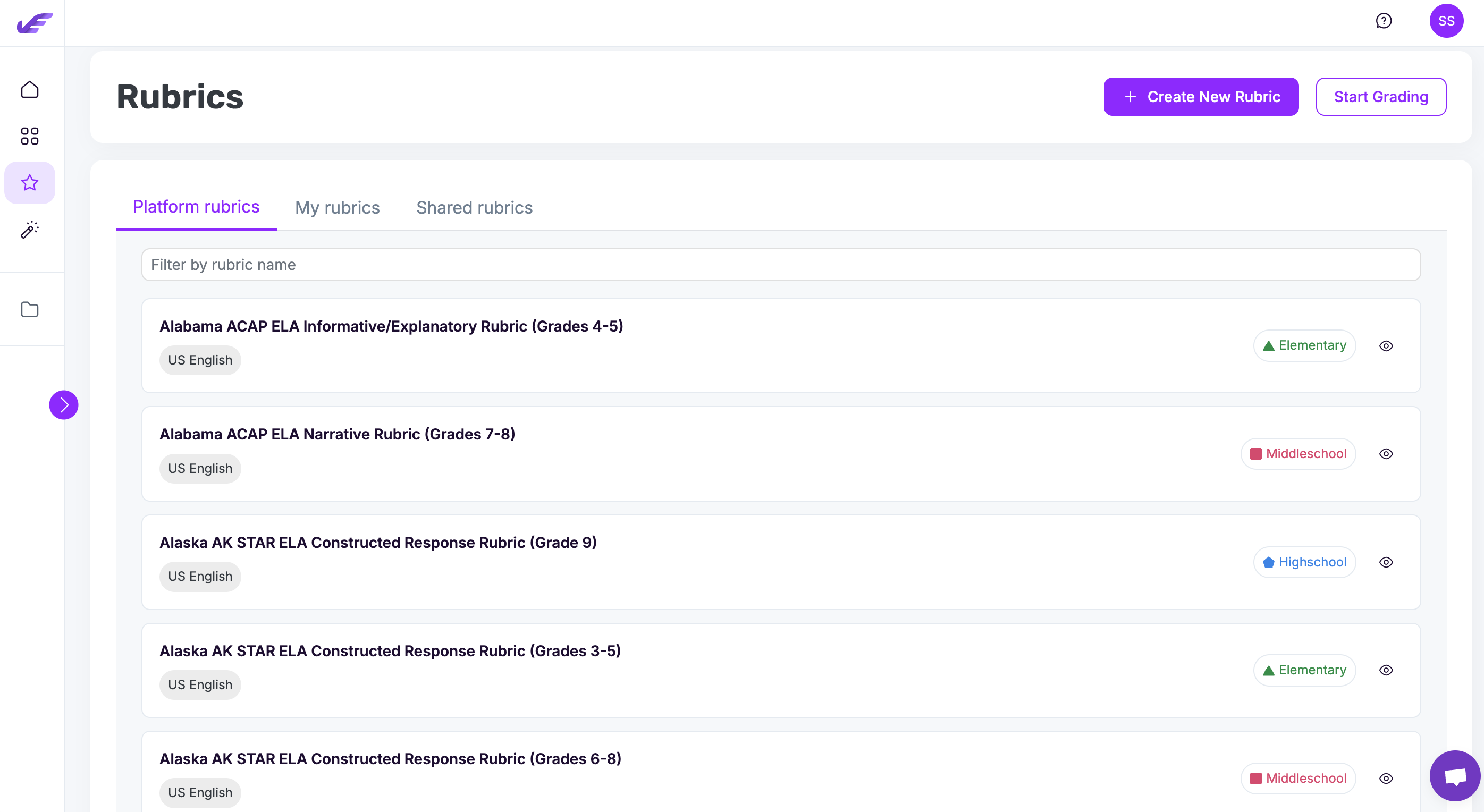

9. How EssayGrader Supports High-Quality Writing Assessment Across All Standards

The presence of multiple consortia and state-specific rubrics across the U.S. can make the writing-assessment landscape feel fragmented, but for teachers, these rubrics are not abstract policy documents—they are the tools their students are actually scored against. That is why EssayGrader is built around a simple but essential principle: teachers should be able to grade using the exact standards their schools and states expect, while also having access to the most widely respected rubric systems in the world.

With more than 500 rubrics in its platform library, EssayGrader includes authoritative rubrics from every major U.S. consortium (SBAC, PARCC, MSAA, and ELPA21) as well as the full range of state-developed writing rubrics. This ensures that teachers in any district can evaluate writing using the framework they already know, without needing to approximate or adapt from an unrelated scoring guide. It also ensures that feedback remains aligned with the expectations used in official scoring, enabling more accurate practice, preparation, and classroom instruction.

But EssayGrader’s library extends beyond state systems. The platform also includes rubrics from IB MYP, AP, Cambridge International, and other global academic organizations whose frameworks are widely used in secondary and post-secondary environments. These rubrics provide a rich and rigorous set of models that teachers can draw from—whether to support advanced coursework or to bring internationally recognized standards into their own curriculum.

Equally important, any rubric within EssayGrader can be saved as a custom template, giving teachers a strong, research-based foundation they can modify to match the specific needs of their classroom, department, or district. This blends accuracy with adaptability: teachers begin with a validated scoring system and then refine it for genre focus, scaffolding levels, skill emphasis, or local benchmarks.

By representing all major consortia, honoring state-specific variations, and supporting customizable templates and flexible scoring, EssayGrader helps ensure that writing assessment is both faithful to official standards and flexible enough to meet real classroom needs. It is a commitment not just to convenience, but to meaningful, high-quality grading grounded in the best assessment practices across the country.

A Shared Framework With Local Flavors

Assessment consortia have shaped K–12 writing instruction for more than a decade. While the political landscape around testing continues to evolve, the rubrics remain a stable foundation for evaluating student writing.

Understanding the origins of these rubrics—where they come from, why they’re shared, and how states adapt them—helps teachers make sense of their own assessment systems and use writing rubrics more effectively.

EssayGrader’s commitment to clarity, consistency, and accuracy ensures that whether a teacher is grading a Smarter Balanced narrative task in Idaho, a PARCC-derived RST in New Jersey, an OSAS writing task in Oregon, or an MSAA alternate writing sample in Arizona, they have the right tools at their fingertips.
